I’ve been posting lots of links to uncertainty quantification (UQ) references lately in comments (eg here, here, here), but I don’t really have a post dedicated only to UQ. So I figured I’d remedy that situaton. This post will be a simple example problem based on methods presented in some useful recent papers on UQ (along with a little twist of my own):

- A good overview of several of the related UQ methods [1]

- Discussion of some of the sampling approaches for high dimensional random spaces, along with specifics about the method for CFD [2]

- Comparison of speed-up over naive Monte Carlo sampling approaches [3]

- A worked example of stochastic collocation for a simple ocean model [4]

The example will be based on a nonlinear function of a single variable

| (1) |

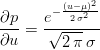

The first step is to define a probability distribution for our input (Gaussian in this case, shown in Figure 1).

| (2) |

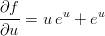

Then, since our example is a simple function, we can analytically calculate the resulting probability density for the output. To do that we need the derivative of the model with respect to the input (in the multi-variate case we’d need the Jacobian).

| (3) |

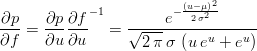

Then we divide the input density by the slope to get the new output density.

| (4) |

We’ll use equation 4 to measure the convergence of our collocation method.

The idea of stochastic collocation methods is that the points which the model (equation 1 in our example) is evaluated at are chosen so that they are orthogonal with the probability distributions on the inputs as a weighting function. For normally distributed inputs this results in choosing points at the roots of the Hermite polynomials. Luckily, Scipy has a nice set of special functions, which includes he_roots to give us the roots of the Hermite polynomials. What we really want is not the function evaluation at the collocation points, but the slope at the collocation points. One way to get this information in a nearly non-intrusive way is to use a complex step method (this is that little twist I mentioned). So, once we have values for this slope at the collocation points we fit a polynomial to those points and use this polynomial as a surrogate for equation 3. Figure 2 shows the results for several orders of surrogate slopes.

The convergence of the method is shown in Figure 3. For low dimensional random spaces (only a few random inputs) this method will be much more efficient than random sampling. Eventually the curse of dimensionality takes its toll though, and random sampling becomes the faster approach.

The cool thing about this method is that the complex step method which I used to estimate the Jacobian is also useful for sensitivity quantification which can be used for design optimization as well. On a related note, Dan Hughes has a post describing an interesting approach to bounding uncertainties in the output of a calculation given bounds on the inputs by using interval arithmetic.

References

[1] Loeven, G.J.A., Witteveen, J.A.S., Bijl, H., Probabilistic Collocation: An Efficient Non-Intrusive Approach For Arbitrarily Distributed Parametric Uncertainties, 45th Aerospace Sciences Meeting, Reno, NV, 2007.

[2] Hosder, S., Walters, R.W., Non-Intrusive Polynomial Chaos Methods for Uncertainty Quantification in Fluid Dynamics, 48th Aerospace Sciences Meeting, Orlando, FL, 2010.

[3] Xiu, D., Fast Numerical Methods for Stochastic Computations, Comm. Comp. Phys., 2009.

[4] Webster, M., Tatang, M.A., McRae, G.J., Application of the Probabilistic Collocation Method for an Uncertainty Analysis of a Simple Ocean Model, MIT JPSPGC Report 4, 2000.

The Computational Chemodynamics Lab at Rutgers has some interesting stuff on stochastic collocation or polynomial chaos expansions, here's one of the abstracts:

ReplyDeleteThe Stochastic Response Surface Method (SRSM) is a recently developed, computationally efficient technique for uncertainty propagation through numerical computational models. The SRSM provides an alternative to traditional techniques such as Monte Carlo and Latin Hypercube Sampling by approximating model outputs as probabilistic response surfaces involving orthogonal polynomials of standard random variables; this approximation is also known as the polynomial chaos expansion. The coefficients of the polynomial approximation are estimated from the model outputs at a set of sample points either via collocation or regression techniques. These coefficients provide combined sensitivity and uncertainty information of model outputs with respect to model inputs. This helps in identifying those inputs that contribute to the output uncertainties the most, thus allowing for prioritizing further studies and data gathering efforts. The application of the SRSM so far has involved prior (a priori) selection of collocation or regression points in order to estimate the polynomial chaos expansion coefficients. The current work presents algorithmic improvements to the SRSM by utilizing Bayesian techniques for estimating the coefficients of polynomial expansion. The improvements to the SRSM are implemented as modules of the MENTOR system (Modeling ENvironment for TOtal Risk studies), and the new SRSM modules are evaluated from the perspective of (a) estimating output uncertainties, (b) assessing contributions of individual input uncertainties, (c) computational efficiency, and (d) ability to utilize outputs from the available model runs. A comparative evaluation of the SRSM with regression and Bayesian techniques is presented for different environmental models with a diverse set of probability distributions for the model inputs including simple mixture distributions.

UQ is critically important when it comes to informing policy:

ReplyDeleteCharacterization of uncertainty associated with transport-transformation models is often of critical importance, as for example in cases where environmental and biological models are employed in risk assessment. However, uncertainty analysis using conventional methods such as standard Monte Carlo or Latin Hypercube Sampling may not be efficient, or even feasible, for complex, computationally demanding models.

This work introduces a computationally efficient alternative method for uncertainty propagation, the Stochastic Response Surface Method (SRSM). The SRSM approximates uncertainties in model outputs through a series expansion in normal random variables (polynomial chaos expansion). The unknown coefficients in series expansions are calculated using a limited number of model simulations. This method is analogous to approximation of a deterministic system by an algebraic response surface.

Rather than use a complex-step method like I did above, they use automatic differentiation:

Further improvements in the computational efficiency of the SRSM are accomplished by coupling the SRSM with ADIFOR, which facilitates automatic calculation of partial derivatives in numerical models coded in Fortran. The coupled method, SRSM-ADIFOR, uses the model outputs and their derivatives to calculate the un-known coefficients.

Computational Methods for the Efficient Sensitivity and Uncertainty Analysis of Models for Environmental and Biological Systems

George,

ReplyDeleteThanks for the article; it's a good one.

I think we can do a few things to keep our friends 'faithful':

Pick the points well, we get into trouble pretty quick with equidistant points.

Pick a nice orthogonal basis, which turn out to be the ones we need to get rapid convergence for this application anyway (particular family depends on the input probability distributions).

The other thing is to do a 'validation point' to compare a prediction from the response surface to a new model evaluation and see if it is within an acceptable tolerance (they do this in that ocean model example).

If all else fails, we can do a least squares approximation instead of exact interpolation, which is usually better conditioned (with a good algorithm like QR). In this example since we pick the original set of points at the zeros of a certain order of Hermite polynomial, if we do an additional validation point it won't be at a zero, so doing least squares rather than interpolation on this new expanded set of points might make sense.

Should I be leery here?

Yes, we can calculate everything... some of it might even be right.

In this paper (Uncertainty in Emissions Projections for Climate Models), they use one of the next higher order zeros as a validation point so that it will be available if the currently used expansion is unacceptable. That makes sense, but if you do decide to go up to the next higher order, should you throw away all the previous points (since they aren't at the new zeros) or use them (since they were hard-earned info)?

ReplyDeleteInteresting upcoming keynote (26 Mar 10) as part of UM's Complex Fluids and Complex Flows,

ReplyDeleteTitle: Abrupt climate change and climate variability: When data fail us

Abstract: We are familiar with the controversy regarding global warming and its social and environmental implications, much less so about why these controversies arise. I will describe the role played by mathematics in climate research and will discuss how mathematics plays a central role in answering one of the toughest technical challenges posed by the Intergovernmental Panel on Climate Change (IPCC 2007) report: How confident are we about predictions of future climate scenarios? In this university-level talk, I will describe why it is so difficult to pin down uncertainties in climate variability and will highlight some of the mathematical tools being developed by The Uncertainty Quantification Group and others, to tackle this question.

What's the time-constant for the 'abrupt' behavior?

This paper has a couple simple examples worked out:

ReplyDeleteA Stochastic Collocation Algorithm for Uncertainty Analysis

Abstract:This report describes a stochastic collocation method to adequately handle a physically intrinsic uncertainty in the variables of a numerical simulation. For instance, while the standard Galerkin approach to Polynonfial Chaos requires multi-dimensional summations over the stochastic basis functions, the stochastic collocation method enables to collapse those summations to a one-dimensional summation only. This report furnishes the essential algorithmic details of the new stochastic collocation method and provides as a numerical example the solution of the Riemann problem with the stochastic collocation method used for the discretization of the stochastic parameters.

This paper is about using stochastic collocation as part of a Bayesian inference procedure for inverse problems: Stochastic Collocation Approach to Bayesian Inference in Inverse Problems

Abstract: We present an efficient numerical strategy for the Bayesian solution of inverse problems. Stochastic collocation methods, based on generalized polynomial chaos (gPC), are used to construct a polynomial approximation of the forward solution over the support of the prior distribution. This approximation then defines a surrogate posterior probability density that can be evaluated repeatedly at minimal computational cost. The ability to simulate a large number of samples from the posterior distribution results in very accurate estimates of the inverse solution and its associated uncertainty. Combined with high accuracy of the gPC-based forward solver, the new algorithm can provide great efficiency in practical applications. A rigorous error analysis of the algorithm is conducted, where we establish convergence of the approximate posterior to the true posterior and obtain an estimate of the convergence rate. It is proved that fast (exponential) convergence of the gPC forward solution yields similarly fast (exponential) convergence of the posterior. The numerical strategy and the predicted convergence rates are then demonstrated on nonlinear inverse problems of varying smoothness and dimension.

Key words: Inverse problems, Bayesian inference, stochastic collocation, generalized polynomial chaos, uncertainty quantification.

Of particular note:

The gPC stochastic Galerkin approach has also been extended to Bayesian inference of spatially-distributed quantities, such as inhomogeneous material properties appearing as coefficients in a PDE [16].

Lots of interesting papers here on Dongxiao Zhang's site. They are mostly focused on ground water and petroleum flows and data assimilation for modeling those things; the recent ones are on combining stochastic collocation methods with Kalman filters.

ReplyDeleteUQ is hot world-wide: Ph.D positions at the Seminar for Applied Mathematics (SAM), The research activity of both students will be in the area of Uncertainty Quantification (UQ) for partial differential equations. Particular attention will be paid to hyperbolic systems of conservation laws, in particluar shallow water equations, Euler equations of gas dynamics and equations of MagnetoHydroDynamics (MHD).

ReplyDeleteAn efficient Bayesian inference approach to inverse problems based on an adaptive sparse grid collocation method, using methods developed for UQ to do Bayesian inference for solving inverse problems, this one has a good review of relevant alternative techniques upfront. The key difference between this and the other similar paper linked above is the dimension of the stochastic space. The previously linked paper addresses low-dimension unknown spaces in the examples; this paper uses an adaptive Smolyak algorithm to tackle the curse of dimensionality faced by inverse problems with multi-dimensional PDEs as the forward models (very high dimension stochastic space).

ReplyDeleteAbstract: A new approach to modeling inverse problems using a Bayesian inference method is introduced. The Bayesian approach considers the unknown parameters as random variables and seeks the probabilistic distribution of the unknowns. By introducing the concept of the stochastic prior state space to the Bayesian formulation, we reformulate the deterministic forward problem as a stochastic one. The adaptive hierarchical sparse grid collocation (ASGC) method is used for constructing an interpolant to the solution of the forward model in this prior space which is large enough to capture all the variability/uncertainty in the posterior distribution of the unknown parameters. This solution can be considered as a function of the random unknowns and serves as a stochastic surrogate model for the likelihood calculation. Hierarchical Bayesian formulation is used to derive the posterior probability density function (PPDF). The spatial model is represented as a convolution of a smooth kernel and a Markov random field. The state space of the PPDF is explored using Markov chain Monte Carlo algorithms to obtain statistics of the unknowns. The likelihood calculation is performed by directly sampling the approximate stochastic solution obtained through the ASGC method. The technique is assessed on two nonlinear inverse problems: source inversion and permeability estimation in flow through porous media.

A low level introduction to high dimensional sparse grids has a thesis, paper and presentation slides on sparse grids.

ReplyDeleteOn Nuit Blanche: High Dimensional Problems and Solutions

ReplyDeleteIn this post I used the sensitivity derivative of the function with respect to the random variable to do the transformation from random input to random output. This info can also be used to increase the efficiency of a more standard Monte Carlo sampling approach:

ReplyDeleteFor a fixed number of samples, there is typically an order of magnitude reduction in the error achieved by the sensitivity derivative-enhanced sampling (SDES) approach. Equivalently, SDES computations require two orders of magnitude fewer samples to achieve the same accuracy in the moments compared with baseline Monte Carlo and stratified sampling schemes. The improvement afforded by the SDES approach appears to increase as the number of random variables increases. The overhead for the extra sensitivity derivative calculations is less than 5%...

Exploitation of Sensitivity Derivatives for Improving Sampling Methods

A more precise definition of UQ is the end-to-end study of the reliability of scientific inferences.

ReplyDeleteScientific Grand Challenges for National Security: The role of computing at the extreme scale.

Turns out, using the sensitivity derivatives (what I calculated with the complex-step method in this post), for stochastic problems helps with sampling too, the Abstract:

ReplyDeleteThis paper establishes a general theoretical framework for variance reduction based on arbitrary order derivatives of the solution with respect to the random parameters, known as sensitivity derivatives. The theoretical results are validated by two examples--the solution of the Burgers equation with viscosity as a single random parameter, and a test case involving five random variables. These examples illustrate that the first-order sensitivity derivative variance reduction method achieves an order of magnitude improvement in accuracy for both Monte Carlo and stratified sampling schemes. The second-order sensitivity derivative method improves the accuracy by another order of magnitude relative to the first-order method. Coupling it with stratified sampling yields yet another order of magnitude improvement in accuracy.

Modeling of physical systems underspecified by data

ReplyDeleteOutline

1. Physical systems & Stochastic PDEs

2. Data-Driven Domain Decompositions (D4) for SPDEs

3. Implementation of D4

(a) Data analysis & image segmentation

(b) Closure approximations for SPDEs

(c) PDEs on random domains

4. Effective parameters for heterogeneous composites

5. Conclusions

Direct Reduction of SPDEs through the stochastic transformation method “Spectral Polynomial Chaos Solutions of the Stochastic Advection Equation”. Some History… ... Multi-element probabilistic collocation method (ME-PCM) ...mpdc.mae.cornell.edu/.../Karniadakis-Presentation.pdf

ReplyDeleteUncertainty Quantification in Computational Models a presentation given at the Workshop on Understanding Climate Change from Data (UCC11), which is apparently an "expedition in computing", sounds fun...

ReplyDeleteExtensive set of slides for an introduction to modern UQ methods, polynomial chaos and stochastic collocation.

ReplyDeleteThe PSUADE Uncertainty Quantification Project: PSUADE is a acronym for Problem Solving environment for Uncertainty Analysis and Design Exploration. It is a software toolkit to facilitate the UQ tasks ...

ReplyDeleteNice animated graphic showing an adaptive stochastic collocation approach.

ReplyDeleteI find this short article to be really interesting and insightful since I have not heard such an explanation of the stochastic collocation method before (and, to be honest, the complex-step trick either); although, I am reading the literature on uncertainty quantification all the time. Can you please provide some links where I can clarify for myself this part "we really want [...] the slope at the collocation points" and what follows it? I am familiar with [1]-[4], but, it seems, I am still missing something. Thank you.

ReplyDeleteIvan, thanks for your comment. If you google terms like probability Jacobian transformation you should find lots of slides/tutorials/lesson notes. That's what we're doing here. The Jacobian in this simple 1-D problem is just the slope we've estimated using the complex step method.

ReplyDeleteYou don't have to do it this way. Lots of folks just use the function values to fit a polynomial, and then use this as a surrogate. It's faster to do Monte Carlo with a polynomial rather than the full model.