First off, thanks to Steve Easterbrook for pointing at a new report on ensemble climate predictions. This post is some of my thoughts on that report.

Here's an interesting snippet from the first section of the report:

Within the last decade the causal link between increasing concentrations of anthropogenic greenhouse gases in the atmosphere and the observed changes in temperature has been scientifically established.

From a nice little summary of how to establish causation:

C. Establishing causation: The best method for establishing causation is an experiment, but many times that is not ethically or practically possible (e.g., smoking and cancer, education and earnings). The main strategy for learning about causation when we can’t do an experiment is to consider all lurking variables you can think of and look at how Y is associated with X when the lurking variables are held “fixed.”

D. Criteria for establishing causation without an experiment: The following criteria make causation more credible when we cannot do an experiment.

(i) The association is strong.

(ii) The association is consistent.

(iii) Higher doses are associated with stronger responses.

(iv) The alleged cause precedes the effect in time.

(v) The alleged cause is plausible.

The point being, if you can't do experiments, the causal link you establish will always be a rather contingent one (and if your population of 'lurkers' is only 4 or 5 then perhaps we aren't to the point of exhausting our imaginations yet). I say that not to be disingenuous and sow doubt unnecessarily, but merely to show that I have an honest place to stand in my skepticism (I'd like to head off the "you're a willfully ignorant pseudo-scientific jerk" sorts of flames that seem to be popular in discussions on this topic).

That little digression aside, the specific aim of the work is to

develop an ensemble prediction system for climate change based on the principal state-of-the-art, high-resolution, global and regional Earth system models developed in Europe, validated against quality-controlled, high-resolution gridded datasets for Europe, to produce for the first time an objective probabilistic estimate of uncertainty in future climate at the seasonal to decadal and longer time-scales;

A side-note on climate alarmism: if the quality of the body of knowledge were such that it demanded action NOW, then this would not have been the first such study. Rational decision support requires these sorts of uncertainty quantification efforts; it is totally irresponsible to demand political action without them.

The improvements for example, add skill to seasonal forecasting while multi-decadal models, for the first time, have produced probabilistic climate change projections for Europe.

Again, now that these projections have been made for the first time, we could actually attempt to validate them. I use that term in the technical sense of comparing a model's predictions to innovative experimental results. Since we can't do experiments on the earth (or can we?) we have to settle for either not validating, or validating by comparing the predictions to what actually happens. I realize that it would take a couple of decades to make useful quantitative comparisons. But think about this: if we've already bought a millennium of warming, then can't we spend a decade or two to build the credibility in our tool-set which we'll be using to 'fly' the climate into the future for centuries to come? The fact that this set of ensemble results claims to be skillful at the decadal time-scales would actually make the validation task a quicker one than it would be with less accurate models, because you've taken some of the 'noise' and explained it with your model.

I got really excited about this; it sounds promising:

The multi-model ensemble builds on the experience of previous projects where it has been shown to be a successful method to improve the skill of seasonal forecasts from individual models. The perturbed parameter approach reflects uncertainty in physical model parameters, while the newly developed stochastic physics methodology represents uncertainty due to inherent errors in model parameterisations and to the unavoidably finite resolution of the models.

Then I got to this:

These results illustrate that initialised decadal forecasts have the potential to provide improved information compared with traditional climate change projections, but the optimal strategy for building improved decadal prediction systems in the presence of model biases remains an open question for future work.

Which reflects my impression of the state of the art from my little mini-lit review on Bayes Model Averaging. That's the fundamental difficulty, isn't it?

This is the sort of thing that worries folks who are used to being able to draw a bright line between calibration and validation:

The ENSEMBLES gridded observation data set was used along with other datasets to verify and calibrate both global and regional models, and also to assess the uncertainties in model response to anthropogenic forcing.

Recall Kelvin,

Conditional PDFs, which encompass the sampled uncertainty, were constructed from the statistically and dynamically downscaled output (and from GCM output) for temperature and/or precipitation for a number of areas and points. These are, however, qualitative constructions.

There's still some work to do here to make the product more useful for decision makers (quantitative rather than qualitative). I think the polynomial chaos expansion approaches being explored in the uncertainty quantification community have a lot of promise here (3 or 4 orders of magnitude speed-up over standard Monte Carlo approaches). The other slight difficulty with this approach is that these qualitative PDFs were then used as inputs into the impact assessments.
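To give a feel for why polynomial chaos can be so much cheaper than Monte Carlo, here is a minimal sketch. Everything in it is an assumption for illustration: the report does not use this method, the `response` function is a made-up smooth model output depending on one standard-normal parameter, and the expansion is truncated at second order using a 3-point Gauss-Hermite rule. The point is only that a handful of model runs can recover the mean and variance that plain sampling needs thousands of runs to pin down.

```python
import math
import random

def response(xi):
    """Hypothetical smooth model output as a function of one N(0,1) parameter."""
    return math.exp(0.3 * xi)

# 3-point Gauss-Hermite rule for a standard normal weight (probabilists' form).
SQRT3 = math.sqrt(3.0)
NODES = (-SQRT3, 0.0, SQRT3)
WEIGHTS = (1.0 / 6.0, 2.0 / 3.0, 1.0 / 6.0)

# Probabilists' Hermite polynomials He_0, He_1, He_2 and their norms E[He_k^2].
NORMS = (1.0, 1.0, 2.0)

def hermite(k, x):
    return (1.0, x, x * x - 1.0)[k]

def pce_coefficients():
    """Project the response onto He_0..He_2 using only three model evaluations."""
    evals = [response(x) for x in NODES]
    return [
        sum(w * f * hermite(k, x) for w, f, x in zip(WEIGHTS, evals, NODES)) / NORMS[k]
        for k in range(3)
    ]

def monte_carlo_mean(n, seed=0):
    """Plain Monte Carlo estimate of the mean from n model evaluations."""
    rng = random.Random(seed)
    return sum(response(rng.gauss(0.0, 1.0)) for _ in range(n)) / n

coeffs = pce_coefficients()
pce_mean = coeffs[0]                                         # zeroth coefficient is the mean
pce_var = sum(NORMS[k] * coeffs[k] ** 2 for k in (1, 2))     # higher terms give the variance

# Closed-form answers for this toy response (moments of a lognormal).
exact_mean = math.exp(0.045)
exact_var = math.exp(0.09) * (math.exp(0.09) - 1.0)

print("PCE (3 runs):   mean error", abs(pce_mean - exact_mean))
print("MC (1000 runs): mean error", abs(monte_carlo_mean(1000) - exact_mean))
```

The catch, of course, is that this only works so nicely when the response is smooth in the uncertain parameters and there aren't too many of them; a real climate model is a much harder case than this toy.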

In light of the previous ensembles post and linked discussion thread, I found this snippet interesting:

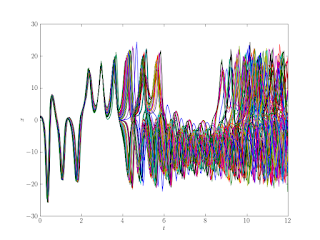

The non-linear nature of the climate system makes dynamical climate forecasts sensitive to uncertainty in both the initial state and the model used for their formulation. Uncertainties in the initial conditions are accounted for by generating an ensemble from slightly different atmospheric and ocean analyses. Uncertainty in model formulation arises due to the inability of dynamical models of climate to simulate every single aspect of the climate system with arbitrary detail. Climate models have limited spatial and temporal resolution, so that physical processes that are active at smaller scales (e.g., convection, orographic wave drag, cloud physics, mixing) must be parameterised using semi-empirical relationships.

In that Adventures Among Alarmists post I made a sort of hand-wavy claim of everything about forecasting (from data assimilation on through to predictive distributions) being one big ill-posed problem with noise. I think reading section 3 of this report will give you a flavor of what I mean. One minor quibble: hindcasts are an OK sort of 'sanity' check, but we should take care to remember their dangers, and not mistake them for true validation. Taking too much confidence from hindcasts is a recipe for fooling ourselves.

The ensemble's skill changes with lead-time:

The skill increases for longer lead times, being larger for 6–10 years ahead than for 3–14 months or 2–5 years ahead. This is because the forced climate change signal, the sign of which is highly predictable, is greater at longer lead times.

This squares with the results discussed over here about BMA in climate and weather forecasting. Depending on the time-frame at which you are looking to forecast, different model weightings and spin-up times are optimal. This also goes to the problem of the past-performance / future-skill connection. Since the feedbacks are not stationary, models which performed well in the past won't necessarily perform well in the future (e.g. the BMA weighting changes through time).
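The lead-time effect in the quoted passage is really a signal-to-noise argument, and a back-of-the-envelope sketch makes it concrete. This is my own toy illustration, not the skill score used in the report: I assume a linear forced trend plus Gaussian internal variability of fixed size, and ask how often the sign of the change would be called correctly. The trend and noise values are hypothetical.

```python
import math

def prob_correct_sign(lead_years, trend=0.02, noise_sd=0.15):
    """Chance that (forced trend * lead time + Gaussian noise) has the sign of the trend.

    trend is in K/year and noise_sd in K; both are made-up illustrative values.
    """
    z = trend * lead_years / noise_sd
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))  # standard normal CDF at z

# Rough mid-points of the three lead-time windows mentioned in the report.
leads = {"3-14 months": 0.7, "2-5 years": 3.5, "6-10 years": 8.0}
skill = {label: prob_correct_sign(years) for label, years in leads.items()}

for label, p in skill.items():
    print(f"{label:>12}: P(correct sign) = {p:.2f}")
```

With fixed noise and a growing signal the 'skill' climbs with lead time, which is exactly why this kind of skill says more about the forcing being one-signed than about the model getting the variability right.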

Encouragingly, the multi-model ensemble mean, which consists of the average of twelve individual projections, gives somewhat higher scores than any of the individual models, whose projections are derived from three members with perturbed initial conditions.

So, truth-centered or not?
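Here is a minimal sketch of why that question matters. It is a synthetic toy, not anything from the report: the 'truth' is a sine wave, each of twelve pretend models is truth plus its own bias plus noise, and the `shared_bias` knob (my invention) controls whether the ensemble is centered on the truth or on something else.

```python
import math
import random

def rmse(pred, truth):
    return math.sqrt(sum((p - t) ** 2 for p, t in zip(pred, truth)) / len(truth))

def simulate(n_models=12, n_times=200, shared_bias=0.0, seed=0):
    """Return (best individual RMSE, ensemble-mean RMSE) for a synthetic ensemble."""
    rng = random.Random(seed)
    truth = [math.sin(2.0 * math.pi * t / 50.0) for t in range(n_times)]
    models = []
    for _ in range(n_models):
        own_bias = rng.gauss(0.0, 0.5)  # each model's private systematic error
        models.append(
            [x + shared_bias + own_bias + rng.gauss(0.0, 1.0) for x in truth]
        )
    ens_mean = [sum(column) / n_models for column in zip(*models)]
    best_single = min(rmse(m, truth) for m in models)
    return best_single, rmse(ens_mean, truth)

# Truth-centered: errors are independent across models and average away.
best_single_tc, ens_tc = simulate(shared_bias=0.0)

# Not truth-centered: every model shares the same bias, which averaging cannot remove.
best_single_sb, ens_sb = simulate(shared_bias=1.0)

print("truth-centered: best single", best_single_tc, "ensemble mean", ens_tc)
print("shared bias:    best single", best_single_sb, "ensemble mean", ens_sb)
```

In the truth-centered case the ensemble mean beats every member handily. With a shared bias, averaging still knocks down the noise, but the error floors out at roughly the size of the common bias no matter how many models you add.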

The results also show that the skill increases for more recent hindcasts. In order to diagnose sources of skill, the blue curve of Figure 3.6 shows ensemble mean results from a parallel ensemble of ‘NoAssim’ hindcasts containing the same external forcing from greenhouse gases, sulphate aerosols, volcanoes and solar variations, but initialised from randomly selected model states rather than analyses of observations.

This seems like a reasonable use of hindcasts. Look for insight into the reasons particular models / realizations might have performed well on certain historical periods. They also found that initialization matters even with climate predictions (though their randomly selected initializations were pretty darn skillful).

The product, decision support:

For many grid boxes there are significant probabilities of both drier and wetter future climates, and this may be important for impacts studies.

I think as regional projections start becoming more and more available, the extreme, alarmist policy prescriptions will be less and less well supported by a rational cost-benefit analysis.

The sensitivity study discussed in the report (done by climateprediction.net) is worth noting simply for the fact that they found an interesting interaction (that's always a fun part of experimentation). Some of the criticism of this effort has focused on the plausibility of some of the parameter combinations in the thousands of runs of this computer experiment. The distinction to keep in mind is that this is a sensitivity study rather than a complete uncertainty quantification study.

Well, it said in the executive summary that they generated 'qualitative PDFs', but it seems like section 3.3.2 is describing a quantitative Bayesian approach. They sample their parameter space with a variety of models with varying levels of complexity and then fit a simple surrogate (some people might call it a response surface) so that they can get approximate 'results' for the whole space. Then they get posterior probabilities by weighting expert-obtained priors by likelihoods, a straightforward application of Bayes' Theorem. That seems as quantitative as anyone could ask for; maybe I'm missing something?
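The prior-times-likelihood step described above can be sketched in a few lines. To be clear, this is my own minimal illustration and not the report's actual procedure: the linear `surrogate`, the parameter grid, the 'expert' prior weights, the observation and its error are all hypothetical numbers chosen to show the mechanics.

```python
import math

def surrogate(theta):
    """Hypothetical response surface: predicted observable for parameter value theta."""
    return 0.4 * theta + 0.1

def gaussian_likelihood(obs, pred, sigma):
    """Likelihood of the observation given a prediction, assuming Gaussian errors."""
    return math.exp(-0.5 * ((obs - pred) / sigma) ** 2) / (sigma * math.sqrt(2.0 * math.pi))

def posterior_weights(thetas, priors, obs, sigma):
    """Bayes' theorem on a discrete grid: posterior is prior times likelihood, normalized."""
    unnormalized = [
        p * gaussian_likelihood(obs, surrogate(th), sigma)
        for th, p in zip(thetas, priors)
    ]
    evidence = sum(unnormalized)
    return [u / evidence for u in unnormalized]

# Made-up grid of an uncertain model parameter and a made-up expert prior over it.
thetas = [1.5, 2.0, 2.5, 3.0, 3.5, 4.0, 4.5]
priors = [0.05, 0.10, 0.20, 0.30, 0.20, 0.10, 0.05]

# Made-up observation and observational error.
obs, sigma = 1.3, 0.2

post = posterior_weights(thetas, priors, obs, sigma)
for th, pr, po in zip(thetas, priors, post):
    print(f"theta={th:.1f}  prior={pr:.2f}  posterior={po:.3f}")
```

The output is a perfectly quantitative PDF over the parameter. What stays subjective is the choice of prior and the error model in the likelihood, so perhaps that is what the 'qualitative' caveat is getting at.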

They give a nice summary of the different types of uncertainties:

Also, the three techniques for sampling modelling uncertainty are essentially complementary to one another, so should not be seen as competing alternatives: the multi-model approach samples structural variations in model formulation, but does not systematically explore parameter uncertainties for a given set of structural choices, whereas the perturbed parameter approach does the reverse. The stochastic physics approach recognises the uncertainty inherent in inferring the effects of parameterised processes from grid box average variables which cannot account for unresolved sub-grid-scale organisations in the modelled flow, whereas the other methods do not. There is likely to be scope to develop better prediction systems in future by combining aspects of the separate systems considered in ENSEMBLES.

I think section 3 was the meat of the report (at least for what I'm interested in), so I'll stop with the commentary on that report there. I want to end with an answer to the question often suggested for dealing with us ornery skeptics, "What evidence would it take to convince you?" This is usually meant to be a jab, because obviously skeptics are really deniers in disguise and we couldn't possibly be reasonable or consider evidence (and it also displays one of the common fallacies of equating disagreement about policy with ignorance of science; science demands nothing but a method). My answer is a simple one:

Rational policy tied to skillful prediction. By rational policy I mean it is foolish to focus on the cost-benefit of extreme events far out into the future weighed against mitigation today or tomorrow (because the tails of those future cost distributions are so uncertain, and the immediate costs of mitigation are relatively well known). It is far more rational to look at the near-term cost-benefit of adaptation to climate changes (no matter their cause) and evolutionary improvements to our irrigation, flood management, public health and energy diversity problems. The skillfulness of near-term predictions can be reasonably validated, and then used to guide policy.

This should be a natural extension of the way we already make agriculture and infrastructure decisions based on weather predictions and an understanding of our current climate. There's no need for the slashing and burning of evil western capitalism (or whatever the rallying cry at Copenhagen was). The ability to skillfully predict changes further and further into the future can be gradually validated (by making a prediction and then waiting, sorry that's what seems reasonable to me) and then the results of those tools can be incorporated into policy decisions. Markets and people don't respond well to shocks. Gradualism may not be sexy, but it's smart.